The process of scale development in psychological research involves multiple phases, each integral to creating a valid and reliable measurement tool. One pivotal stage is the selection and reduction of scale items, a process shaped by the assessment of content validity. This phase refines the scale and sharpens its focus, helping to produce a measurement tool that accurately captures the intended construct.

Content validity, as previously discussed, is central to this stage. It is the degree to which scale items genuinely, comprehensively, and accurately represent the specific construct targeted for measurement. In essence, content validity ensures that the items within the scale are not only relevant but also closely aligned with the psychological trait, behavior, or attribute being assessed. Its objective is to capture the essence of the construct, leaving no critical aspect unexamined (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014).

Put differently, the items within the scale should be a faithful reflection of the targeted phenomenon. Without robust content validity, a scale may fail to serve its intended purpose, producing results that do not represent the construct under investigation (Clark & Watson, 2015).

Content validity assessment offers valuable guidance on which items to retain and which to consider for removal. Its essential component is expert judgment: experts in the field related to the construct evaluate the items within the scale, critically assessing whether they accurately and comprehensively represent the construct. The evaluation covers the relevance, clarity, and overall representativeness of the items (Clark & Watson, 2015).

This phase of scale development is iterative: experts provide feedback on the items, suggesting revisions or clarifications where needed, and the items are revised accordingly. Each cycle strengthens the content validity of the scale, ensuring that the items are not only reflective of the construct but also intelligible and unambiguous.

Expert judgment, in this context, is more than a casual opinion. Although it remains a matter of judgment, it draws on years of knowledge and experience in the field, and pooling the ratings of several experts makes it a systematic basis for content validity assessment. The collaboration of experts helps ensure that the items tap into the essential elements that define the construct, providing a strong foundation for the scale's development and effectiveness (Clark & Watson, 2015).

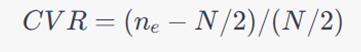

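The assessment of content validity, often conducted through expert reviews, yields essential information for item selection. In Lawshe's (1975) approach, each member of an expert panel rates every item as "essential," "useful but not essential," or "not necessary," and the Content Validity Ratio (CVR) of an item is computed as CVR = (nₑ − N/2) / (N/2), where nₑ is the number of panelists rating the item essential and N is the total number of panelists. CVR ranges from −1 to +1 and is positive only when more than half of the panel rates the item essential, so a positive value signals a degree of consensus among experts regarding the item's necessity. Items whose CVR is sufficiently high are deemed essential for capturing the construct and are retained in the scale, as they are considered vital for representing it comprehensively and accurately. Lawshe also tabulated minimum CVR values, which depend on panel size, that an item should reach to be retained (Lawshe, 1975).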
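Because Lawshe's ratio is simple arithmetic, a short script can make item screening transparent and reproducible. The Python sketch below is a minimal illustration under stated assumptions, not a procedure taken from the cited sources: the function names `content_validity_ratio` and `screen_items`, the item labels, and the panel ratings are all hypothetical. The retention threshold is passed in as a parameter rather than hard-coded, because the appropriate minimum depends on panel size and should be taken from a published critical-value table, not from this example.

```python
from typing import Dict, List

# Lawshe's (1975) "essential" category; the exact label string is an assumption.
ESSENTIAL = "essential"


def content_validity_ratio(n_essential: int, n_panelists: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), ranging from -1 to +1."""
    if n_panelists <= 0:
        raise ValueError("the panel must contain at least one expert")
    if not 0 <= n_essential <= n_panelists:
        raise ValueError("n_essential must lie between 0 and n_panelists")
    half = n_panelists / 2
    return (n_essential - half) / half


def screen_items(ratings: Dict[str, List[str]], threshold: float) -> Dict[str, float]:
    """Return {item: CVR} for every item whose CVR meets or exceeds threshold.

    `ratings` maps a hypothetical item label to one rating per panelist.
    Only ratings equal to ESSENTIAL count toward n_e; every rating counts toward N.
    """
    retained: Dict[str, float] = {}
    for item, item_ratings in ratings.items():
        n_e = sum(1 for r in item_ratings if r == ESSENTIAL)
        cvr = content_validity_ratio(n_e, len(item_ratings))
        if cvr >= threshold:
            retained[item] = cvr
    return retained


if __name__ == "__main__":
    # Hypothetical ratings from a 10-member expert panel.
    panel_ratings = {
        "item_01": [ESSENTIAL] * 9 + ["useful but not essential"],
        "item_02": [ESSENTIAL] * 6 + ["not necessary"] * 4,
        "item_03": [ESSENTIAL] * 3 + ["useful but not essential"] * 7,
    }
    for item, r in panel_ratings.items():
        print(item, content_validity_ratio(r.count(ESSENTIAL), len(r)))
    # Illustrative cutoff only; use the critical value for your own panel size.
    print(screen_items(panel_ratings, threshold=0.62))
```

With these invented ratings, item_01 has a CVR of 0.8 and is retained, while item_02 (0.2) and item_03 (−0.4) fall below the illustrative cutoff and would be flagged for review or removal.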

Content validity assessment serves a second purpose as well: it highlights potential redundancy within the scale. Redundant items lengthen the scale, add to respondent burden, and reduce its efficiency. To mitigate this, items that consistently overlap with or duplicate the measurement of the same facet of the construct are considered for removal. This item reduction streamlines the scale, yielding a more concise and focused set of items with less redundancy (Clark & Watson, 2015).

The significance of content validity in scale development is widely recognized within the psychological and educational research communities, and a number of publications address it directly.

For example, Haladyna, Downing, and Rodriguez (2002) address content validity as part of test development. The authors discuss its various elements, emphasizing the crucial role of expert judgment in evaluating items. Their work underscores the importance of aligning test items with the targeted construct, which is central to content validity.

In personality research, O'Neill, Goffin, and Tett (2009) examine content validity as an essential component of personality assessment. Their work emphasizes the importance of expert judgment and theoretical alignment in creating personality measures with strong content validity, and it highlights the need for measurement tools that reflect the richness and complexity of personality constructs.

Content validity is not merely a technical aspect of scale development; it is the bedrock on which the effectiveness and accuracy of psychological scales rest. Through a methodical process that combines expert judgment with the Content Validity Ratio (CVR), researchers can verify that their scale items genuinely and comprehensively represent the construct. The outcome of this assessment is a focused measurement tool whose items, and therefore whose results, are aligned with the construct under investigation.

The importance of content validity resonates throughout psychological research, as the studies above illustrate. By prioritizing content validity, we as researchers raise the quality and impact of our work, ensuring that our measurement scales are robust, meaningful, and aligned with the constructs we aim to explore. In the evolving landscape of psychological research, content validity remains a steady guide toward precise, reliable, and valuable measurement tools.