What are the potential biases associated with publication bias in meta-analyses?

Publication bias arises when studies with significant or positive results are more likely to be published than those with inconclusive or negative results, potentially distorting meta-analytical findings.

A meta-analysis that does not use up-to-date methods can mislead policymakers and researchers as much as a well-conducted one can inform them. A fundamental issue is publication selection bias and "p-hacking", which refers to manipulating data analysis until it produces statistically significant results, compromising the truthfulness of the findings. Of the 107,000 meta-analyses published in 2022, more than half do not discuss publication bias at all. Because publication bias or p-hacking can easily exaggerate the typical reported effect size by a factor of two or more, meta-analyses that ignore publication bias may do more harm than good (Irsova et al., 2023).
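The exaggeration mechanism can be illustrated with a small simulation. The following is a minimal sketch, not a method from Irsova et al. (2023): the true effect of 0.1, the range of standard errors, the 1.96 significance cut-off, and the 10% publication chance for non-significant results are all illustrative assumptions, and the function names are hypothetical.

```python
import random
import statistics


def simulate_studies(n_studies, true_effect, seed):
    """Draw (estimate, standard_error) pairs for hypothetical studies."""
    rng = random.Random(seed)
    studies = []
    for _ in range(n_studies):
        se = rng.uniform(0.05, 0.5)  # assumed range of study precision
        estimate = rng.gauss(true_effect, se)  # unbiased but noisy estimate
        studies.append((estimate, se))
    return studies


def select_published(studies, p_publish_nonsig, seed):
    """Keep every significantly positive study; keep the rest with low probability."""
    rng = random.Random(seed)
    published = []
    for estimate, se in studies:
        # Positive and significant at the conventional 1.96 cut-off (assumption).
        significant = estimate / se > 1.96
        if significant or rng.random() < p_publish_nonsig:
            published.append((estimate, se))
    return published


def mean_effect(studies):
    """Unweighted mean of the reported estimates."""
    return statistics.fmean(estimate for estimate, _ in studies)


TRUE_EFFECT = 0.1  # hypothetical true effect
all_studies = simulate_studies(5000, TRUE_EFFECT, seed=1)
published = select_published(all_studies, p_publish_nonsig=0.1, seed=2)

print(f"true effect:            {TRUE_EFFECT:.3f}")
print(f"mean of all studies:    {mean_effect(all_studies):.3f}")
print(f"mean of published only: {mean_effect(published):.3f}")
```

Under these assumptions the mean of the published subset sits above the mean of all simulated studies, because imprecise studies reach significance only when they happen to overestimate the effect.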

Excluding unpublished studies from systematic reviews can omit critical evidence and produce biased, overly positive results. This is a significant concern: prior studies suggest that meta-analyses that do not consider grey literature can overstate the effectiveness of interventions, potentially leading to misguided policies and ineffective interventions.

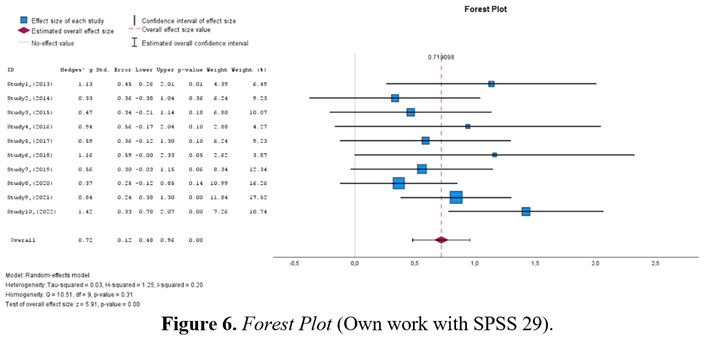

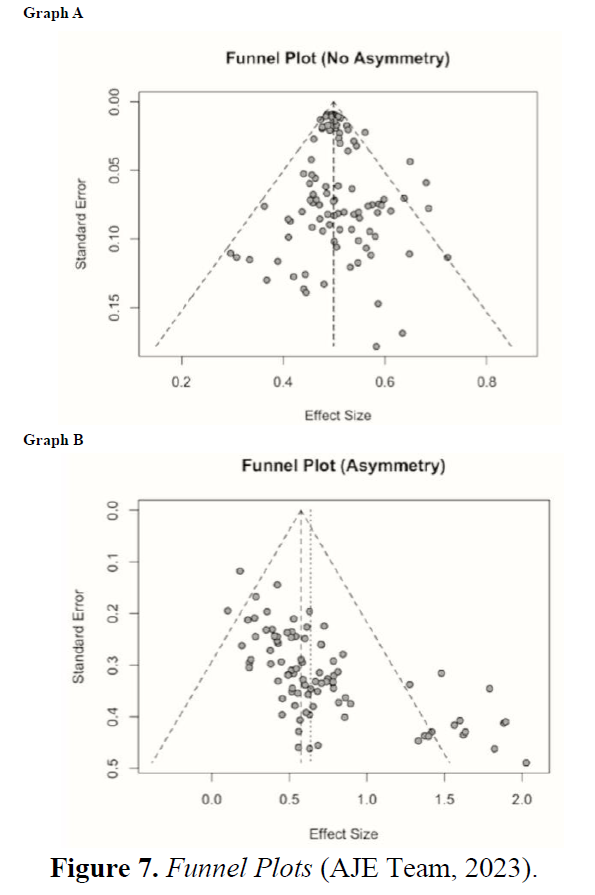

Numerous sophisticated, theoretically grounded methods have recently been developed to address publication selection bias. These approaches have been validated through extensive Monte Carlo simulations and are applicable across many research settings. Methods for addressing the bias include the trim-and-fill technique, Egger's regression test, and the Copas selection model. Recent advances also cover the handling of observed and unobserved systematic heterogeneity under model uncertainty, as well as certain types of p-hacking*. Together, these methodological advances are essential steps towards understanding and interpreting contemporary research.
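As an illustration of one of these methods, Egger's regression test can be sketched as an ordinary least-squares regression of each study's standardized effect (estimate divided by its standard error) on its precision (one over the standard error). An intercept far from zero suggests funnel-plot asymmetry. The code below is a minimal sketch using only the standard library; the function name `egger_test` and the example data are hypothetical, and it returns a t-statistic rather than a p-value (which would need a t-distribution with n - 2 degrees of freedom).

```python
import math


def egger_test(effects, std_errors):
    """Return (intercept, slope, t_statistic_of_intercept) for Egger's regression."""
    n = len(effects)
    if n < 3:
        raise ValueError("need at least three studies")

    precision = [1.0 / se for se in std_errors]             # regressor
    snd = [y / se for y, se in zip(effects, std_errors)]    # standardized effects

    x_bar = sum(precision) / n
    z_bar = sum(snd) / n
    sxx = sum((x - x_bar) ** 2 for x in precision)
    sxz = sum((x - x_bar) * (z - z_bar) for x, z in zip(precision, snd))

    slope = sxz / sxx                   # estimate of the underlying effect
    intercept = z_bar - slope * x_bar   # asymmetry (small-study effect) term

    # Residual variance and the usual OLS standard error of the intercept.
    sse = sum((z - intercept - slope * x) ** 2 for x, z in zip(precision, snd))
    resid_var = sse / (n - 2)
    se_intercept = math.sqrt(resid_var * (1.0 / n + x_bar ** 2 / sxx))

    return intercept, slope, intercept / se_intercept


# Hypothetical data: less precise studies report larger effects.
example_effects = [0.80, 0.55, 0.45, 0.30, 0.22, 0.20]
example_ses = [0.40, 0.30, 0.22, 0.15, 0.10, 0.08]

b0, b1, t_stat = egger_test(example_effects, example_ses)
print(f"intercept={b0:.3f}, slope={b1:.3f}, t={t_stat:.2f}")
```

In practice an established implementation in a dedicated meta-analysis package is preferable; this sketch only makes the regression behind the test explicit.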

When conducting a meta-analysis, it is crucial to consider the various sources of bias that can affect the study's conclusions. Doing so is essential to ensure the validity and reliability of the findings. Common sources of bias include:

- Selection Bias: This can occur when studies or participants are not selected randomly, leading to a sample that does not represent the population.

- Reporting Bias: This arises when available results systematically differ from missing results, often favouring significant, positive outcomes. Publication bias is its best-known form.

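- Performance Bias and Detection Bias: Performance bias arises from systematic differences in how interventions are delivered to study groups, and detection bias from systematic differences in how outcomes are assessed. Both can influence the results.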

- Attrition Bias: This bias occurs when participants are lost from the study groups at different rates, potentially undermining the validity of the findings.

- Omitted Variable Bias: This bias can distort average estimates in a meta-analysis, particularly when the analysis corrects for the wrong source of bias.

Publication bias in meta-analyses can distort findings in several ways, as the following points drawn from academic abstracts show. These distortions, which can significantly affect the validity and generalizability of conclusions, are a key research focus.

- Publication Bias Influence: Publication bias can suppress unfavourable studies and thereby skew meta-analytic results towards artificially favourable outcomes, a concern that research must address.

- Detection Methods: Various statistical tests have been proposed to detect publication bias, but their effectiveness depends on what they assume about the mechanism behind the bias, so their power varies across scenarios. Although publication bias is widely acknowledged in meta-analyses, its effects still need to be formally assessed and corrected. Currently, only a small percentage of meta-analyses attempt to address publication bias.

- Impact on Validity: The prevalence of potential publication bias in meta-analyses, particularly in specific disciplines, raises concerns about the validity and generalizability of conclusions.

- Methodological Challenges: Standard meta-analysis methods are vulnerable to bias arising from incomplete reporting of results and poor study quality, and there are no clear guidelines for assessing this bias.

- Test Limitations: Some tests for publication bias, such as Egger's test and weighted regression tests, may have inflated Type I error rates or low statistical power, especially in the presence of heteroscedasticity. These limitations matter because publication bias, in which studies with statistically significant findings are published more often than those with non-significant results, can lead to an overestimate of the true effect size.

Following Harrer et al. (2021) and Page et al. (2021), it is important to understand that several other factors can distort the evidence in our meta-analysis. These factors can have a significant impact and include:

- Citation bias: Studies with negative or inconclusive findings, even if published, are less likely to be cited in related literature. This makes them harder to identify through reference searches.

- Time-lag bias: Studies with positive results are often published earlier than those with unfavourable findings. As a result, recently conducted studies with positive results are often already available, while those with non-significant results are not.

- Multiple publication bias: The results of "successful" studies are more likely to be reported in several journal articles, which makes it easier to find at least one of them. Reporting study findings across several articles is also known as "salami slicing."

- Language bias: In most disciplines, the primary language in which evidence is published is English. Publications in other languages are less likely to be detected, especially when the researchers need a translation to understand the contents. Bias can arise when studies in English systematically differ from those published in other languages.

- Outcome reporting bias: Many studies, and experimental designs in particular, measure more than one outcome of interest. Some scientists take advantage of this by disclosing only the results that support their hypothesis and disregarding those that do not. This can also lead to bias: technically speaking, the study has been published, but its (unfavourable) result will still be missing from our meta-analysis because it is not reported.

* P-hacking is the manipulation of data analysis until it produces statistically significant results, compromising the truthfulness of the findings.